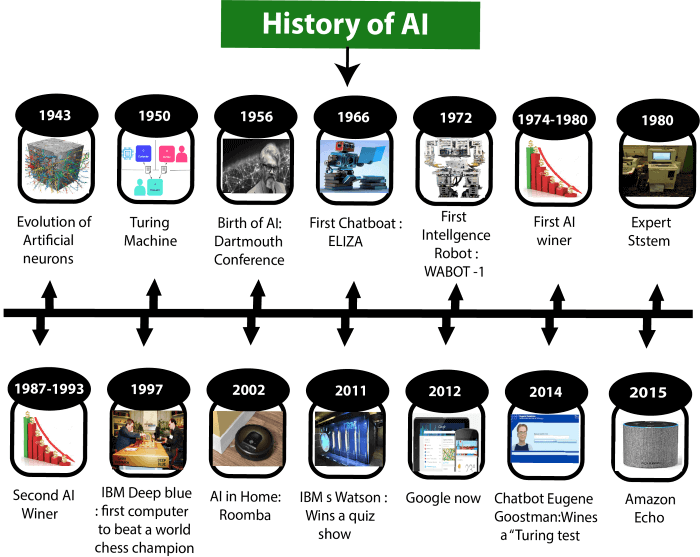

Artificial intelligence (AI) has come a long way since its inception in the 1950s. What was once the stuff of science fiction is now a rapidly evolving field with a wide range of practical applications. In this article, we’ll take a look at the history of AI and how it has progressed over the years.

The Early Days of AI

The concept of AI can be traced back to ancient Greek mythology, where the myth of Pygmalion told the story of a sculptor who created a statue that came to life. However, it wasn’t until the mid-1950s that the term “artificial intelligence” was coined, in a proposal for a summer workshop at Dartmouth College. At that workshop, held in 1956, a group of researchers set out to explore the possibility of creating machines that could think and learn like humans.

At the time, AI research focused largely on getting programs to reason with symbols and to recognize patterns in data. Early AI programs from this era include ELIZA, a 1960s program that carried on simple conversations with humans by matching patterns in what they typed, and the first chess-playing computer programs, which could eventually beat some human players. ChatGPT, by comparison, is leaps and bounds ahead of these early programs: a real innovation in AI technology and a glimpse of what we can expect in the future.

AI in the 1980s and 1990s

In the 1980s and 1990s, AI research continued to advance, with a focus on developing expert systems that could make decisions based on the rules and knowledge provided to them. These systems were used in a variety of industries, including healthcare and finance, to assist with tasks such as diagnosis and risk assessment.

During this time, AI also made its way into popular culture, with the release of movies such as “Blade Runner” and “The Terminator,” which depicted AI as a potential threat to humanity. This helped to raise awareness of AI and spark discussions about its potential uses and ethical considerations.

The Rise of Machine Learning

In the 21st century, the focus of AI research shifted to machine learning, which enables computers to learn and improve their performance without being explicitly programmed. This has led to significant advancements in fields such as image and speech recognition, natural language processing, and autonomous vehicles.

Machine learning has also played a significant role in the development of personal assistants such as Apple’s Siri and Amazon’s Alexa, which use AI to understand and respond to voice commands. In addition, machine learning has been used to improve online advertising, recommend products to customers, and even help diagnose medical conditions.

Currently, there are hundreds of AI tools at our disposal, with hundreds more being trained and developed for the future.

The Future of AI

The future of AI looks bright, with many experts predicting that it will continue to revolutionize a wide range of industries. Some of the potential uses of AI in the future include improving cybersecurity, optimizing supply chain management, and even helping to discover new drugs and treatments for diseases.

However, as with any new technology, there are also valid concerns about the negative impacts of AI, including job losses, bias in algorithms, and the possibility of misuse. It’s important that these issues be carefully considered as we continue to explore the possibilities of AI.

Overall, the history of AI is one of rapid progress and innovation. From its early days as a field of research to its current state as a fast-moving technology with numerous practical applications, AI has come a long way and is sure to keep shaping our world in the years to come.